The Evolution of Deepfake Technology: Understanding the Dangers

Deepfake technology has advanced rapidly, bringing both remarkable capabilities and new dangers to the online world. As an IT company committed to tech innovation and cyber safety, we want to shed light on the dangers posed by deepfakes and on how users can navigate the digital landscape securely.

Understanding Deepfake Technology

Deepfake refers to synthetic media generated using deep learning techniques, where Artificial Intelligence (AI) algorithms are trained to replace a person in a video or audio clip with someone else. The resulting media often portrays something that never happened in reality. Initially, these technologies were seen as fascinating tools for entertainment and creative expression. However, their use has since spread across many areas, including entertainment, politics, and even cybercrime.

The Dark Side of Deepfake

Cybercriminals and fraudsters have swiftly capitalized on deepfake technology, utilizing it for malicious purposes. From spreading false information to impersonating individuals for financial fraud or extortion, the implications are profound and potentially devastating. A recent advisory from the Cyber Security Agency of Singapore (CSA) highlights the rising threat of deepfake scams, where fraudsters impersonate government officials or business executives to deceive victims into transferring funds or disclosing sensitive information.

Threats to Vulnerable Populations

One of the most concerning aspects of deepfake technology is its potential to harm vulnerable populations, including children and the elderly. Because children are more susceptible to manipulation and exploitation online, deepfake content poses a significant risk to their safety and well-being. Similarly, elderly individuals, who may be less tech-savvy, are more likely to fall victim to false information or fraud carried out through deepfake technology.

One effective way to educate children and the elderly about the dangers of deepfake technology is to encourage conversations by sharing related news or reports. Show them examples, and teach them how to verify content before forwarding it to anyone else. It is important to remind them that they can turn to someone for help if needed.

Distinguishing Reality from Deception

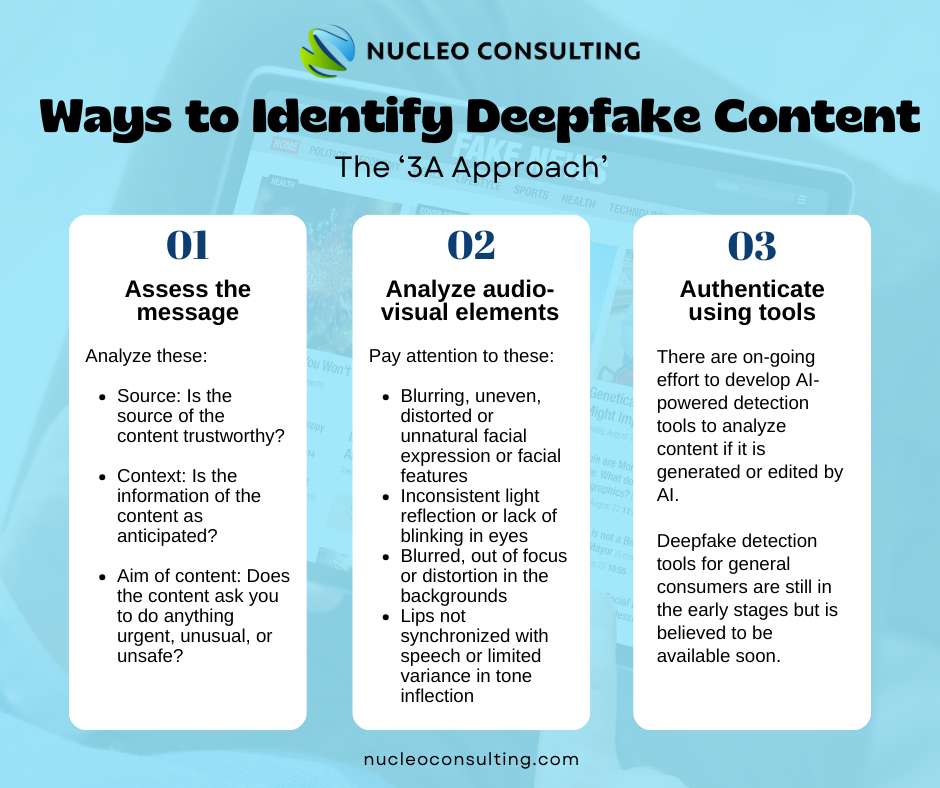

Deepfake content has become very common these days. It is important for members of the public to stay vigilant and to be equipped with the knowledge needed to tell authentic media from manipulated media. While detecting deepfakes can be challenging, there are red flags to look out for, such as inconsistencies in facial expressions, unnatural movements, or artifacts in the audio or video.

- Assess the message:

  - Source: Is the source of the content trustworthy? Official organizations, institutions, or individuals who own the content directly are examples of trustworthy sources.

  - Context: Is the content consistent with what you would expect from the source? For example, Singapore public officials are unlikely to advise members of the public to invest in third-party investment schemes, so such a message is a sign that the content may be manipulated.

  - Aim of content: Does the content ask you to do anything urgent, unusual, or unsafe? Requests to click on links, download unknown third-party applications, or provide your personal information are all common red flags to take note of.

- Analyse audio-visual elements:

  - Images & Videos: Pay attention to the facial features, expressions, and eye movements. Does anything seem unnatural, blurred, uneven, or distorted?

  - Audio & Videos: Listen carefully and check whether the lips are synchronized with the speech. Look out for differences in background noise or limited variance in tone inflection.

- Authenticate using tools:

  - Internationally, there are ongoing efforts to develop AI-powered detection tools that analyze content for signs of AI generation or editing. While techniques like pixel analysis are used to detect inconsistencies, deepfake detection tools for general consumer use are still in the early stages. However, content provenance techniques, which verify data origin and authenticity, are gaining maturity, with companies like Meta and OpenAI planning to include metadata tags or ‘watermarks’ to indicate AI-generated content. Users can look out for these tags and labels, once they are available, to better understand the nature of the content.

By following the 3A approach (Assess, Analyse, Authenticate), individuals can better detect deepfakes and help limit the spread of falsified content.
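For readers who like to see a checklist written down as logic, the 3A questions above can be sketched in a few lines of Python. This is only an illustration of the manual checks, not a deepfake detector: the class name, field names, and wording of the flags are our own assumptions, and every answer still comes from a person reviewing the content.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContentChecks:
    """Answers a reviewer records for one piece of content (hypothetical fields)."""
    # Assess the message
    trusted_source: bool            # official body or the direct owner of the content
    fits_context: bool              # message matches what the source would normally say
    urgent_or_unsafe_request: bool  # asks for clicks, downloads, or personal data
    # Analyse audio-visual elements
    visual_artifacts: bool          # blurred, uneven, or distorted facial features
    lip_sync_mismatch: bool         # lips do not match the speech
    odd_audio: bool                 # odd background noise or flat tone
    # Authenticate using tools
    labelled_ai_generated: bool     # a tag, label, or watermark marks it as AI-generated


def red_flags(checks: ContentChecks) -> List[str]:
    """Return the red flags raised by the reviewer's answers."""
    flags = []
    if not checks.trusted_source:
        flags.append("Assess: source is not trustworthy")
    if not checks.fits_context:
        flags.append("Assess: message is out of character for the source")
    if checks.urgent_or_unsafe_request:
        flags.append("Assess: urgent, unusual, or unsafe request")
    if checks.visual_artifacts:
        flags.append("Analyse: unnatural or distorted visuals")
    if checks.lip_sync_mismatch:
        flags.append("Analyse: lips not synchronized with speech")
    if checks.odd_audio:
        flags.append("Analyse: odd background noise or flat tone")
    if checks.labelled_ai_generated:
        flags.append("Authenticate: content is labelled as AI-generated")
    return flags


# Example: a video of an "official" promoting an investment scheme.
example = ContentChecks(
    trusted_source=False,
    fits_context=False,
    urgent_or_unsafe_request=True,
    visual_artifacts=False,
    lip_sync_mismatch=True,
    odd_audio=False,
    labelled_ai_generated=False,
)
for flag in red_flags(example):
    print("-", flag)
```

An empty list does not prove that content is genuine. The sketch deliberately avoids scores or thresholds, because even a single red flag is a reason to verify the content before sharing it.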

Empowering Education and Awareness

In conclusion, combating the dangers posed by deepfake technology requires a multi-pronged approach that combines technological solutions with educational initiatives. By raising awareness among vulnerable populations, such as children and the elderly, about the existence and implications of deepfake content, we can empower them to navigate the digital landscape safely. Furthermore, fostering digital literacy and critical thinking skills is paramount in equipping individuals to differentiate between genuine and manipulated content.

Team Nucleo remains committed to harnessing the power of AI for positive societal impact while advocating for responsible usage and safeguarding against potential risks. Together, we can mitigate the threats in the ever-evolving digital landscape with vigilance, resilience, and a commitment to fostering a safer digital future for all.
